[WEBINAR] Q&A: Facing Contention ~ How to Detox Public Engagement

Belmont

Engagement

They said they had 3,500+ touch points and 30,500+ data points. How did they count these and did it include the website unique views?

The touch points included participation in public meetings, committee meetings (which were open to the public), the MetroQuest survey, and the website unique views. The data points were calculated as part of the analysis and summary of the engagement activities.

Would have liked to understand whether the municipality participated in social media forums or was just an observer

The City was an active participant in developing and executing the engagement strategy. The City prefers to utilize surveys, comment forms, email, phone, website (www.belmontbridge.org), public meetings and traditional press to solicit public comment and provide information. Social media forums pose challenges: the amount of staff time needed to monitor and answer questions, the risk of perpetuating misinformation posted by commenters, the possibility of being dominated by opponents or grandstanders, etc.

Budget

Just curious about the budget to accomplish the project.

The first design effort began with a budget of $14.5 million and a focus on utility (improving/adding multi-modal features, improved landscaping). Due to public response and the Council’s decision to pursue the Enhanced Bridge Concept, the budget was increased by $9 million with local funding and a state grant to expand the scope to include more design and enhanced aesthetics in addition to utility.

What was the budget for public engagement?

The public engagement budget was approximately $400,000, which included the review of past design effort materials, development of the engagement strategy, project branding, website creation/upkeep, 6 technical committee meetings, 6 steering committee meetings, 3 sets of meetings with 5 focus groups, 3 community events (including a multi-day charrette), the MetroQuest survey, and the presentations and hearings associated with the formal approval of the recommended design.

Contention

For the Belmont Bridge team, I assume that hostile groups still appeared. Would you tell me how you countered those groups with the information gained through your engagement plan? Or did you counter them?

We definitely encountered groups and individuals focused on a narrow part of the overall design. To meaningfully engage these groups, we followed many of the best practices discussed during the call, including:

- Worked in partnership with the City to identify known opposition and their viewpoint/objective

- Listened to their concerns without allowing them to be disruptive. (These groups need to feel their input is genuinely valued but also measured against the project constraints, design program, and other facts and opinions.)

- Developed memos/material to directly and clearly respond to concerns and state the project team’s position, documenting the data collected and the regulations, standards, and practices considered. These materials were used at later meetings and posted online.

- If the process is sound, it will either win them over, or make it clear their views are not supported by the community at large, in which case they tend to fade out of the process

- The City regularly engages with a local facilitator with credibility in the community to help manage disruptive voices at meetings.

Process

In the Charlottesville example, what was the City’s level of involvement in the public participation process? Was it largely handed off to the consultant, or did staff have a significant role?

Staff played a significant role in establishing the engagement process and provided an important local voice and perspective during preparation. The City requested the consultant team facilitate meetings so technical experts could directly interact with the public/committees, which is appreciated in this community. Staff, of course, participated in each event and would “step in” to answer the tough questions/provide direction when necessary.

Can you discuss how community values were captured and translated?

Community values were captured during early engagement activities, including the initial steering committee meeting, the Mobility Summit, and the MetroQuest survey. It was important to state why community values were being documented and explain these values in clear and concise language.

Did you use tools to translate technical drawings such as BIM?

No

Were the images used for weighing aesthetic preferences realistic renderings for what the project would look like or stock photos? If stock photos, did the public expect the project to look like those pictures?

Stock photos were used for some design elements or themes for early engagement prior to development of conceptual designs. We made it clear that the intent of the images was to gauge where the community aligned relative to specific themes and values, and that they did not represent design intent. Once a preference for “look” was established (for this project, the preference is for contemporary with limited “funky” details), examples are being refined through the design process to reflect City Standards (light fixtures) or proposed treatments that can be accomplished with the project (bridge abutment cladding, railing, etc.).

Results

If you had to do it all over, what would you do differently?

Knowing the difficult project history, we view this effort as very successful in terms of process and outcomes. Ordering better weather for our outdoor event would have been nice but did not hinder participation.

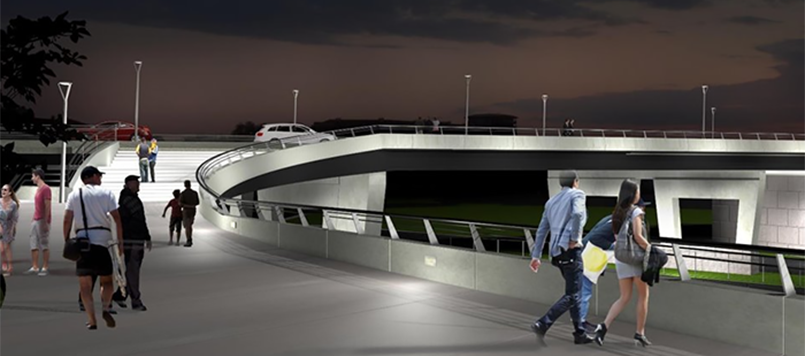

What was the outcome for the bridge? Show us the final design.

Portions of the preferred design alternative were shown in the presentation and are attached. A Design Public Hearing is being scheduled for May 2018 with construction in 2019.

Questions and Responses

There were so many questions that came in during our live webinar that we were unfortunately unable to answer them all. We have since had the opportunity, with the help of our guest speakers (Jeanette Janiczek from the City of Charlottesville, and Jonathan Whitehurst and Sal Musarra from Kimley-Horn), to answer these questions. Thank you to everyone who participated for such compelling questions!

General Questions

I would like to share this with a client. Will there be a recording available later?

Yes, the recording is now available on-demand. Please feel free to share the link with anyone that you feel would benefit from the information. Here it is: https://metroquest.com/webinars/belmont-bridge-kimley-horn/

MetroQuest Questions

How do you prevent duplication of comments/feedback? Can you monitor if people from outside the region are participating?

MetroQuest has tools and techniques to identify and help mitigate ballot stuffing in order to protect the integrity of the results. It’s very rare but, if it happens, these tools allow potential abuse to be easily recognized and nullified. The system also tracks the location of participants automatically so clients can be confident that participants are local.

MetroQuest looks like effective public participation software. How can I learn more about costs?

I apologize for not providing more in-depth information about MetroQuest. Due to the educational nature of this session I didn’t feel that it was appropriate. Pricing for an annual subscription for MetroQuest depends on the size and nature of your organization. I would suggest you connect with Derek Warburton at MetroQuest (by email at derek.warburton@metroquest.com, or toll-free by phone at 1-855-215-0186).

Can you tell me more about the smart phone survey tools you showed?

The images of the smart phone survey tools were from the Belmont Bridge project.

This is how MetroQuest looks for participants using smart phones. MetroQuest automatically senses the screen size of participants and adjusts to ensure that the experience is attractive and useful on their device.

How can we create safe online spaces? We’ve gotten sadly familiar with trolls from around the world barging into our virtual civic space. People are much more nervous about online privacy and protection from personal repercussions than they used to be, with good reason.

MetroQuest has been specifically designed for privacy and to ensure that people feel safe and not subjected to trolls or other biasing or intimidating forces online. People are free to participate anonymously and there are no open forum comment areas which can attract trolls.

Excellent tools! Is there a template available?

MetroQuest comes with a suite of templates for various kinds of engagement (priority ranking, visual preferences, budgeting, map input, scenarios, etc.). You simply select the templates that you wish to use, add in your text and images and launch it to the public.

I represent a consulting firm and am wondering if it is better for us to subscribe to a service to assist with public engagement, or have our communities sign up?

That’s a good question. I can speak only to our experience with MetroQuest. There are many consulting firms and government agencies that make good use of an annual subscription. The advantage to consulting firms is that having a subscription gives them a competitive advantage in the services they offer and allows them to provide better results for their clients. Spread across many projects, the cost per project will be small. For agencies the advantage they talk about is to be able to use online engagement on even the small projects that often have very small budgets and little or no consultants. These smaller and less glamorous projects are as in need of public input as many larger projects and benefit greatly from the efficient way online tools can extend the breadth and reach of outreach. I’d say that if you have an opportunity to conduct outreach 3 or more times per year it makes sense to consider a subscription.

Best Practice Questions

Great session. Can you talk more about how to specifically engage vulnerable or under-represented populations?

Yes, indeed, engaging environmental justice communities, non-English speakers, people with limited literacy or access to technology, people who are vulnerable or have mobility challenges and many other groups who are traditionally under-represented is an important challenge. As you might guess, it’s about much more than ensuring that you offer your engagement in different languages. While there are several design guidelines to ensure that online tools are more likely to be effective for engaging these vulnerable people, a big part of the solution is to ensure that your engagement has an effective “go to them” strategy using pop-up engagement. Whether you set up a table with iPads, paper-based surveys or voting dots on boards, by picking events carefully, you can effectively target underrepresented audiences. The staff who attend can assist people as needed to accommodate a wide diversity of participants.

Much of the dialog on the Environmental Justice topic is focussed on language. I think this is much too narrow. Yes, engagement should be offered in multiple languages but that does not mean that people from different cultural backgrounds will participate. I think the most effective way to really engage these groups is by first engaging the leaders in their community. These community leaders are already trusted and sometimes are the only effective way to mobilize participation.

How key is the educational aspect, where you inform the public of things that they typically don’t include in their view on a project or specific project elements?

Public input tainted by misinformation or lack of awareness of trade-offs and constraints can be worse than useless. It can lead to increasing community frustration. For this reason, the educational aspects of engagement are an integral part of the success for the project, particularly on contentious projects. The education can help broaden the perspective of participants to consider elements that they might not have considered, gain an appreciation of opposing views (empathy), and ensure that the input gathered is a true reflection of public preferences.

As staff, how do you balance using good policy to override community consensus?

This is a tough question. The policy should serve the public. If there is consensus against the policy presumably either: the public does not understand the implications of the policy and how it benefits them, the “consensus” is not truly representative of the broader community, or it’s not a policy that is in the best interests of the community. In my experience it’s typically one of the first two possibilities, and the most effective response is to mount an educational engagement campaign and seek to reach a wider cross-section of the community. If it’s the third, then the policy itself might need to be re-examined.

How does one handle an influential city official who wants to advance his or her individual preference?

This is best countered by widespread and convincing public input. If the official continues to advance his or her position in the face of public preferences to the contrary, the official should be asked to defend their position in a transparent process.

I would like tips for how to correct public misinformation campaigns.

Misinformation campaigns can be difficult. It’s easy to get drawn into an unwinnable fight that makes the agency look defensive. My best advice is to make sure that people know about participation opportunities that you are organizing and that you welcome an open discussion about the topic. I have often seen erroneous information get corrected by members of the public. It can seem too self-serving if agency staff are the only ones doing the correcting.

One primary challenge we face is the lack of trust in the data or the need for change because they don’t trust local government based on poor public processes conducted in the past. How might that legacy of mistrust be addressed?

To answer this properly could take a whole webinar. In a nutshell, trust takes time to build and a minute to lose. My top advice: admit that it’s a challenging situation with conflicting interests upfront, tell them about your constraints, do everything you can to demonstrate that you are hearing them and report back frequently about how public input is affecting the outcome. Do this consistently and trust will build. If you can’t comply, don’t worry. You don’t have to give in to build trust. Transparency is the key.

What are some strategies to prevent a very vocal minority from dominating a process and intimidating other participation?

The best defence against a vocal minority is to add the voice of the silent majority. Public engagement can be designed to eliminate or minimize opportunities for anyone to dominate the process. Try using table discussions instead of plenary sessions with a mic in the middle. Try online engagement without open forums that invite trolls to grandstand, bias or intimidate others.

How do you engage an uninformed and apathetic public?

We touched briefly on this in the webinar and it is covered in more detail in this session focused on best practice. The main advice is to make it dramatically easier to participate by making it fast, fun and easy. Online tools are the most effective way to accomplish this, but setting up pop-up engagement tables at community events is another way to engage people who would otherwise not participate. As mentioned, it’s important to weave education into the engagement because, as you suggest, most people are not well informed on government policies or planning matters.

What is the most effective way to engage the “general public” to get meaningful input when you have limited monetary resources and time? (i.e. the low to moderately motivated groups)

Because face-to-face engagement is labour intensive, online engagement can be effective both in saving costs and in engaging the moderately motivated groups. Be careful to keep your online engagement short and as easy as possible. Keep in mind that people who are less motivated are also not as aware of the issues, so some education should be built into the experience to gather, as you say, meaningful input. This on-demand webinar covers this topic in more detail.

What is the best and most efficient way to counter residents who say: “I don’t believe that data. I’ve done my own research and it completely contradicts what you’re saying.” Sometimes it appears that no amount of data will please people.

As discussed in the guidebook, it’s important to avoid using data to address emotionally charged arguments. This is a mistake often seen in case studies. If people are angry or frustrated no data will help them calm down. Practitioners recommend starting with the emotions. Probe for information about how they are feeling, what scares them about a project, how they feel they will be impacted, and make sure they know that you have heard. It was suggested in the webinar to talk about priorities. This begins to focus the dialog on what outcomes people want and what consequences people wish to avoid.

This kind of discussion about priorities and values can be a powerful tonic for emotional participants. Once people feel heard and see that their priorities are represented (among others) the transition to talk about solutions can begin. It is at this stage where data can play a role. If people have other contradictory data it’s best to be open to considering it, letting them know that your research says something different but that you are open to looking at their findings as well.

Are there metrics out there based upon a municipality’s population to the ratio of the ideal range of public participation to achieve displayed as a percentage?

There isn’t a consensus about the ideal percentage but everyone seems to agree that more is better, especially politicians and decision makers but also community members. According to statisticians who deal with community population polling, it’s typically less about a percentage of the population than it is about a number of people. Most information suggests that the number is about 30 people per cohort. What this means is that if you engage 30 people in a particular cohort and they, for example, rank 8 items in order of preference, the order of the items would not change if you go on to engage 3,000 more in that same cohort. In our experience with MetroQuest this seems to be the case and it does not seem to matter if it’s an engagement in a town of 5,000 or a Metropolitan region. The trick is then, how many different cohorts or demographic groups do you have in your community with potentially differing views or needs related to the project? When you add it up, needing 30 people from each of the different age groups, neighborhoods, economic groups, etc., it becomes evident quickly that engaging large numbers is rather important if you would like to truly represent the diversity of the community.
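As a rough illustration of how that adds up, here is a minimal Python sketch. The community, its dimensions, and the group counts are hypothetical, and the 30-per-cohort figure is the rule of thumb quoted above, not a statistical guarantee. The sketch assumes each participant falls into exactly one group per dimension, so the overall figure it reports is only a lower bound that holds if participation is perfectly balanced across groups.

```python
PER_COHORT = 30  # rule-of-thumb figure quoted in the answer above


def participants_needed(groups_per_dimension, per_cohort=PER_COHORT):
    """Return (target per dimension, overall lower bound on participants).

    groups_per_dimension maps a dimension name (e.g. "neighborhood")
    to the number of distinct groups within that dimension.
    """
    targets = {dim: n * per_cohort for dim, n in groups_per_dimension.items()}
    # Each participant counts once in every dimension, so the dimension
    # with the most groups sets the minimum number of participants.
    floor = max(targets.values(), default=0)
    return targets, floor


# Hypothetical community: 5 age groups, 12 neighborhoods, 4 income bands.
example = {"age group": 5, "neighborhood": 12, "income band": 4}
targets, floor = participants_needed(example)

for dim, needed in targets.items():
    print(f"{dim}: at least {needed} participants")
print(f"overall lower bound: {floor} participants")
```

In practice participation is rarely balanced, so the number of people you need to reach will usually be well above this lower bound.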

I would like more info on public engagement/public comment on routine planning items. Development plans and rezonings that do not engage the public beyond the legal requirements of public notice and the public hearings. Those hearings also often get toxic, people are very emotional, and planners tend to get blamed for projects that they are required to process. I would love more info from this type of perspective.

I feel your pain. I lament the fact that big projects seem to get all the attention and that routine projects get little time on the podium. I think the best practices equally apply to routine projects. Bare minimum engagement that does not go beyond the legal requirements typically engages a very narrow cross-section of the community. Since it’s only the most highly motivated people who attend these required public hearings I’m not surprised that you find that they often get toxic. The moderate voices are comfy on their couches doing other things. It’s a big mistake to be too swayed by these minority voices as they seldom represent the opinions of the broader community.

The problem is often that these more routine projects do not have a big engagement budget so running an extensive outreach process is often impossible. Online community engagement tools like MetroQuest are typically used on an annual subscription that allows for unlimited use. These can be a cost-effective way to dramatically broaden the engagement for even routine projects, since there are no incremental fees for using them more frequently.

Most other community engagement tactics involve more labour but pop-up engagement can be done with little cost. This can be a great way to fill in some of the gaps for underrepresented demographics by piggy-backing on existing community events.

I’m keen to know more about that diagram showing the extremes show up while people unsure or in the middle do not. Fits my intuition. How can this be useful for outreach, engagement planning, and cross-citizen civility efforts?

I think the primary lesson in that diagram is that high-barrier participation activities (e.g. public meetings) ensure that you hear mainly from the most polarized people. For that reason it’s best to plan those events to allow people to speak their minds and hear the perspectives of others who may hold a different view. To keep the loud voices from disrupting the entire meeting, most facilitators recommend table conversations with 6-8 people maximum and an agreed-upon set of ground rules.

The other main lesson is the need to include very low barrier options for participation such as the fun and interactive web tools used by the Belmont Bridge team. These are better suited to engage the more moderate voices and, if they are designed carefully, can be immune to tampering or being dominated by trolls.

Do you have ideas for getting planning out to people even when there’s not a specific project or vote going on? For example, I notice that the census bureau shows up at street fairs etc.

I really like pop-up engagement as a way to reach people. By going to an event that is planned already and popping up some kind of simple display (table or boards on easels) it’s possible to reach people who would otherwise never find you.

Can you share a case study where a meeting went off the rails and was salvaged?

Sadly, people tend to shy away from talking about meetings that go off the rails even if, in the end, they were salvaged. I think there is a great deal to learn from these situations. I wrote an article about one of my “fiascos” here https://metroquest.com/what-i-learned-when-an-angry-mob-destroyed-my-public-meeting/.

I wasn’t able to salvage that particular meeting but I was able to apply some techniques in subsequent meetings on the same project that worked well. Based on the interest in the topic, it seems like a session on managing public meeting anger would be very useful. Stay tuned!

There was not much stated about whether a municipality should just observe the social media forums or should they participate with information?

In the guidebook it is recommended that you perform a social media scan in a passive “ear to the ground” manner. Too often agencies make the mistake of assuming that factual information is all that is needed to calm emotional people. It seldom works so I would advise caution with intervening in the dialog. It can be effective to ask for clarifying information, let them know that you are listening and share any information about how people can constructively participate in the process.

Is it patronizing to lay out the rules of respectful public discourse at the beginning of meetings?

Not at all. This is a best practice that is outlined in the guidebook. This can be done very diplomatically. For example: “Thank you everyone for taking time out of your schedule to be here. We’re here to listen and we’d like to hear from everyone. To that end, we’d like to get agreement on a set of ground rules that will allow everyone an equal opportunity to participate, even the quietest among us.” Do this as early as possible and refer back to it throughout the session. The motivation is not to silence any loud voices but rather to be fair to quiet ones.

At a meeting, how do you defuse a speaker who is angry and move the discussion back to the purpose/focus of the meeting without getting totally off-track?

This topic is worthy of an entire session so stay tuned for upcoming sessions. In short, when participants get angry it’s best to acknowledge the emotion and invite them to talk more about their priorities and objectives. Draw it out with questions like, can you share with people why that is so important to you? Make sure they know you’ve heard them and understand their concern and then ask if others share that concern. When you are seen as approaching the subject as opposed to recoiling from it or defending a position the mood should shift for the better. When it is more settled it’s then time for a gentle pivot to opposing views. Ask if anyone can think of what people with opposing views might say. Notice how I didn’t ask if anyone disagrees. That’s too intimidating for many. From there the dialog can often proceed in a much more constructive manner.

In general, it’s best in emotionally charged situations to avoid plenary discussions with a room full of people. Table discussions with 6-8 people are preferred.

Psychological and behavioral pointers to keep in mind when facing contention – for instance don’t sit higher than the public (making them feel inferior).

There are a few ideas in the guidebook on this topic. The one that I feel is most important is to admit at the beginning of the session that it’s a difficult situation with valid yet opposing views. The biggest mistake I’ve seen repeated too many times is for the project team to begin the meeting essentially promoting or selling a project. This stance is typically irritating to people with opposing views.